In 2025, the race to dominate next-generation AI chip architectures has never been more intense. As artificial intelligence scales from cloud data centers to edge devices, hardware innovation is redefining the limits of performance, efficiency, and scalability. The battlefront is now clearly drawn between NVIDIA’s Blackwell chip, AMD’s MI300 AI chip, and a growing wave of custom ASICs developed by hyperscalers and startups.

So, who really leads the AI hardware game in 2025 — and why? Let’s dive deep into the architectures, benchmarks, and trade-offs that define the future of AI acceleration.

1. NVIDIA Blackwell Chip: The New Titan of AI Acceleration

NVIDIA’s Blackwell architecture succeeds the Hopper generation, pushing GPU design toward higher performance-per-watt and greater mixed-precision flexibility. Built on a custom TSMC 4NP (4nm-class) process, each Blackwell GPU joins two dies into a single package, and fifth-generation NVLink connects GPUs to one another. NVIDIA positions Blackwell as delivering large generational gains in large language model (LLM) training and inference.

Key Highlights:

- Up to 20 petaflops of FP4 performance per GPU (NVIDIA's quoted peak figure)

- Advanced tensor cores supporting FP8/FP4 precision for optimal AI workload balance

- Unified memory architecture for massive model scaling beyond 1 trillion parameters

- Deep integration with NVIDIA DGX GB200 systems and Grace CPU nodes for end-to-end HPC and AI synergy
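
To make the precision and model-size highlights above concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone of a 1-trillion-parameter model would need at different precisions. The helper name `weight_memory_gb` and the model size are illustrative assumptions, and the estimate ignores activations, optimizer state, and KV cache, all of which add substantially to real deployments.

```python
# Bits per parameter for each numeric format (weights only).
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    Hypothetical helper for illustration; counts weights only.
    """
    bits = BITS_PER_PARAM[precision]
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    params = 1e12  # assumed model size: 1 trillion parameters
    for precision in BITS_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(params, precision):,.0f} GB")
```

Each halving of precision halves the weight footprint, which is one reason low-precision formats such as FP8 and FP4 matter for fitting very large models into a fixed pool of accelerator memory.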

Verdict: NVIDIA remains the market leader in AI chip architecture ecosystems, with unmatched software support via CUDA, TensorRT, and the NVIDIA AI Enterprise stack.

2. AMD MI300 AI Chip: The Challenger with a Hybrid Edge

AMD’s MI300 series, especially the MI300X, is designed to rival NVIDIA’s dominance with a focus on memory bandwidth, energy efficiency, and open-source flexibility.

Architecture Overview:

- 3D-stacked chiplet design: the MI300A variant is an APU that combines CPU and GPU chiplets in a single package, while the MI300X is a GPU-only accelerator

- Up to 192 GB of HBM3 memory and 5.3 TB/s memory bandwidth on the MI300X

- Optimized for inference, training, and HPC workloads simultaneously

- Supported by AMD's open-source ROCm software stack, with PyTorch and TensorFlow integrations

In recent benchmarks, the AMD MI300 AI chip has demonstrated competitive training performance against NVIDIA’s H100 and early Blackwell previews, particularly in memory-bound workloads, where throughput is limited by how fast data moves from memory rather than by raw compute.
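
Why memory bandwidth matters for memory-bound work can be illustrated with a simple estimate. During single-stream LLM decoding, every generated token requires reading roughly all model weights once, so bandwidth sets a floor on time per token. The sketch below uses the 192 GB and 5.3 TB/s figures quoted above; the 70-billion-parameter FP16 model and the function names are assumptions for illustration, and the estimate ignores KV cache traffic, batching, and compute time.

```python
# Figures quoted in the article for the MI300X.
HBM_CAPACITY_BYTES = 192e9          # 192 GB of HBM3
HBM_BANDWIDTH_BYTES_PER_S = 5.3e12  # 5.3 TB/s


def fits_in_memory(model_bytes: float, capacity_bytes: float) -> bool:
    """True if the weights alone fit in accelerator memory."""
    return model_bytes <= capacity_bytes


def min_seconds_per_token(model_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on decode latency: time to stream all weights once."""
    return model_bytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    # Assumed workload: 70 billion parameters stored at 2 bytes each (FP16).
    weights = 70e9 * 2
    print("fits on one device:", fits_in_memory(weights, HBM_CAPACITY_BYTES))
    floor = min_seconds_per_token(weights, HBM_BANDWIDTH_BYTES_PER_S)
    print(f"bandwidth floor: {floor * 1e3:.1f} ms/token")
```

Under these assumptions the floor works out to roughly 26 ms per token. Real systems batch requests and overlap work, so treat this as an intuition aid, not a benchmark.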

Verdict: AMD’s hybrid approach offers excellent performance-to-cost and a strong alternative for enterprises seeking flexibility beyond the CUDA ecosystem.

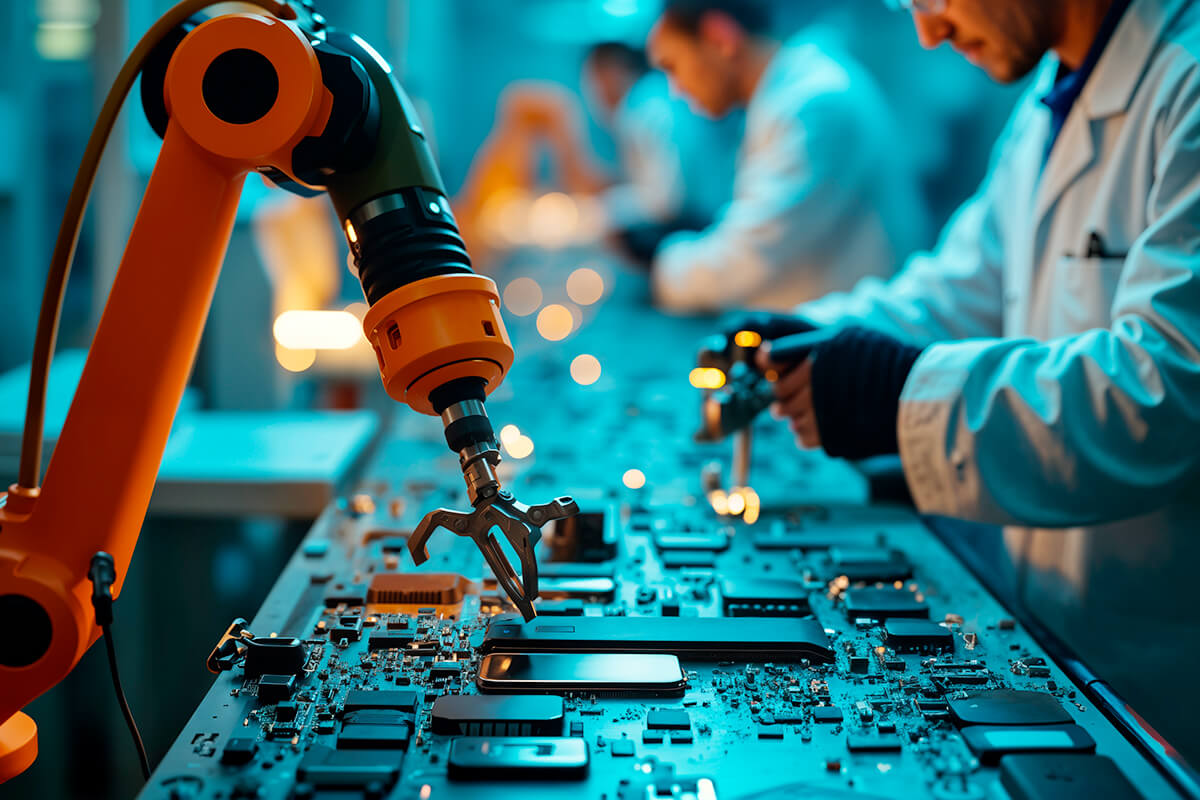

3. Custom ASICs and Emerging AI Chip Architectures

While GPUs dominate, custom AI ASICs are reshaping the competitive landscape in 2025. Giants like Google (TPU v6), Amazon (Trainium2), and Tesla (Dojo D1/D2) are developing chips tuned for specific workloads, and these chips can outperform general-purpose GPUs in efficiency on the workloads they target.

Emerging Trends in Next Generation AI Chip Architectures 2025:

- Domain-specific accelerators (DSAs): Tailored for transformer and diffusion model workloads

- Chiplet-based designs: Modular architectures allow flexible scaling and reduced fabrication costs

- Photonics and memory-on-package (MOP): Reducing interconnect latency for next-gen AI chips

- RISC-V AI cores: Gaining traction for lightweight inference at the edge
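
The claim that chiplets reduce fabrication costs can be sketched with the classic Poisson yield model, where the fraction of defect-free dies falls exponentially with die area. The defect density, die sizes, and function names below are hypothetical values chosen for illustration, not figures from any vendor, and the model assumes chiplets are tested before assembly and ignores packaging and interconnect overhead.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-defects_per_cm2 * area_cm2)


def silicon_per_good_package(die_area_mm2: float, dies_per_package: int,
                             defects_per_cm2: float) -> float:
    """Expected wafer area (mm^2) consumed per package built from good dies."""
    good_fraction = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_package * die_area_mm2 / good_fraction


if __name__ == "__main__":
    d0 = 0.1  # assumed defect density, defects per cm^2
    monolithic = silicon_per_good_package(800, 1, d0)  # one 800 mm^2 die
    chiplets = silicon_per_good_package(200, 4, d0)    # four 200 mm^2 dies
    print(f"monolithic: {monolithic:.0f} mm^2 of silicon per good package")
    print(f"chiplets:   {chiplets:.0f} mm^2 of silicon per good package")
```

With these assumed numbers, the four-chiplet design consumes noticeably less wafer area per working package than the single large die, because a defect scraps only a small chiplet instead of the whole part. In practice, advanced packaging costs claw back some of that saving.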

Startups like Cerebras, Graphcore, and Tenstorrent continue to innovate with designs such as wafer-scale engines and reconfigurable compute fabrics that rival NVIDIA and AMD on certain workloads.

4. The Market Outlook: AI Hardware in 2025 and Beyond

The next-generation AI architectures of 2025 reflect a clear trend — the convergence of GPUs, CPUs, and ASICs into heterogeneous computing ecosystems.

By 2030, analysts forecast:

- A $350 billion AI chip market, with over 40% share from hyperscaler in-house ASICs

- Rising adoption of edge AI hardware for autonomous vehicles, robotics, and smart manufacturing

- Growing collaboration between semiconductor foundries and AI startups for domain-optimized designs

The race is not just about speed anymore — it’s about energy efficiency, scalability, and software ecosystem maturity.

Conclusion: The Real Winner in 2025

The crown for AI hardware leadership in 2025 depends on perspective:

- NVIDIA Blackwell chip dominates large-scale AI training with a mature software stack

- AMD MI300 AI chip delivers balanced performance with better cost-efficiency and open-source freedom

- Custom ASICs push the boundaries of efficiency and specialization for hyperscale AI infrastructure

Ultimately, the future belongs to hybrid AI architectures, where GPUs, CPUs, and ASICs coexist to power the world’s next wave of intelligent systems.