Smart Logging blends IoT sensors, AI chips, and edge analytics to capture, clean, enrich, and act on data the moment it’s created. It cuts latency, shrinks cloud costs, boosts availability, and unlocks predictive insights for factories, logistics, utilities, energy, and aerospace.

Why Smart Logging Is Surging Now?

The world is adding billions of connected sensors, creating a continuous stream of telemetry that traditional, batch data loggers can’t handle alone. Recent industry trackers estimate 21.1B connected IoT devices in 2025, on track for 39B by 2030 (≈13.2% CAGR)—a surge explicitly tied to AI-driven use cases.

On the compute side, Edge AI is scaling fast to analyze this data in situ: the market is projected to grow from ~$20.8B (2024) to $66.5B by 2030 (≈21.7% CAGR), driven by real-time processing needs and privacy constraints. Meanwhile, the Industrial IoT (IIoT) stack that transports and manages operational data is forecast to reach ~$1.69T by 2030 (≈23.3% CAGR from 2025), reflecting strong adoption in manufacturing, energy, and logistics.

Even the “classic” data logger category is expanding as it modernizes: analysts see the global data logger market rising from ~$10.27B (2024) to ~$15.62B by 2030 (≈7.2% CAGR).

What Is “Smart Logging”?

Smart Logging is the evolution of data logging from simple timestamped capture to continuous, context-aware intelligence. It uses:

- IoT sensors (temperature, vibration, pressure, acoustics, GNSS, power, camera/vision)

- AI-optimized chips at the edge for low-latency inference

- Stream pipelines to normalize, enrich, and encrypt data

- Edge+Cloud orchestration for storage, analytics, and lifecycle management

Core Capabilities

- Edge inference: Compress data volumes by filtering noise and extracting features/events locally.

- Real-time anomaly detection: Detect drift, outliers, and thresholds before a fault escalates.

- Predictive maintenance: Combine condition indicators and usage context to forecast failures. The predictive maintenance market alone is projected to grow at ~27–35% CAGR to 2029/2030, underscoring enterprise ROI. (MarketsandMarkets)

- Policy-aware retention: Encrypt, sign, and route only what’s needed to cloud archives to reduce cost and risk.

- APIs & open formats: Publish clean, labeled, queryable streams to MES/ERP/SCADA, data lakes, and BI tools.
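As a rough illustration of the real-time anomaly detection capability listed above, the sketch below flags readings that cross an absolute limit or sit far from a rolling baseline. It is a minimal example under stated assumptions, not the AIChips implementation: the class name `RollingZScoreDetector`, the window size, and the z-score threshold are all hypothetical, and it covers outliers and thresholds only, not drift.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flags readings that deviate strongly from a rolling baseline (illustrative)."""

    def __init__(self, window=20, z_threshold=3.0, hard_limit=None):
        self.history = deque(maxlen=window)  # recent readings judged normal
        self.z_threshold = z_threshold       # assumed value, tune per asset
        self.hard_limit = hard_limit         # optional absolute threshold

    def update(self, value):
        """Return a reason string if the reading is anomalous, else None."""
        reason = None
        if self.hard_limit is not None and value > self.hard_limit:
            reason = "threshold"
        elif len(self.history) >= 5:  # wait for a minimal baseline first
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                reason = "outlier"
        if reason is None:
            # Only normal readings update the baseline, so a fault
            # does not pull the baseline toward itself.
            self.history.append(value)
        return reason


def detect(readings, **kwargs):
    """Run the detector over a sequence and collect (index, value, reason)."""
    detector = RollingZScoreDetector(**kwargs)
    events = []
    for i, value in enumerate(readings):
        reason = detector.update(value)
        if reason is not None:
            events.append((i, value, reason))
    return events
```

Only the flagged events would need to leave the device, which is how local detection also reduces data volume.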

Smart Logging Architecture by AIChips

The AIChips architecture spans three layers: connectivity and control, an AIChip-powered edge layer, and cloud analytics.

Connectivity & Control

- Adaptive protocols (MQTT, OPC UA, CoAP) with QoS and store-and-forward

- Dynamic bandwidth shaping: prioritize alerts over routine metrics

- Over-the-air (OTA) policy: roll out models and logging rules to fleets safely
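Store-and-forward and alert prioritization from the list above can be sketched with a small priority buffer. This is a hedged illustration only: `StoreAndForwardBuffer`, the `ALERT`/`METRIC` constants, and the `send` callback are hypothetical names, and no real MQTT, OPC UA, or CoAP client is involved. When the buffer is full, this sketch drops the newest lowest-priority message, which is one possible policy among several.

```python
import heapq
import itertools

ALERT, METRIC = 0, 1  # lower number = higher priority (assumed convention)


class StoreAndForwardBuffer:
    """Buffers messages while offline and drains alerts before metrics."""

    def __init__(self, capacity=1000):
        self.capacity = capacity
        self._heap = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order per priority

    def put(self, priority, payload):
        """Store a message; return False if it was dropped."""
        entry = (priority, next(self._seq), payload)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, entry)
            return True
        worst = max(self._heap)  # lowest priority, most recent
        if entry > worst:
            return False  # incoming message is the least important
        self._heap.remove(worst)
        heapq.heapify(self._heap)
        heapq.heappush(self._heap, entry)
        return True

    def drain(self, send, max_messages=None):
        """Send in priority order; stop on the first failed send."""
        sent = 0
        while self._heap and (max_messages is None or sent < max_messages):
            if not send(self._heap[0][2]):
                break  # link is down again; keep the message for later
            heapq.heappop(self._heap)
            sent += 1
        return sent
```

The `max_messages` argument stands in for a bandwidth budget: with a small budget per drain cycle, alerts go out first and routine metrics wait for spare capacity.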

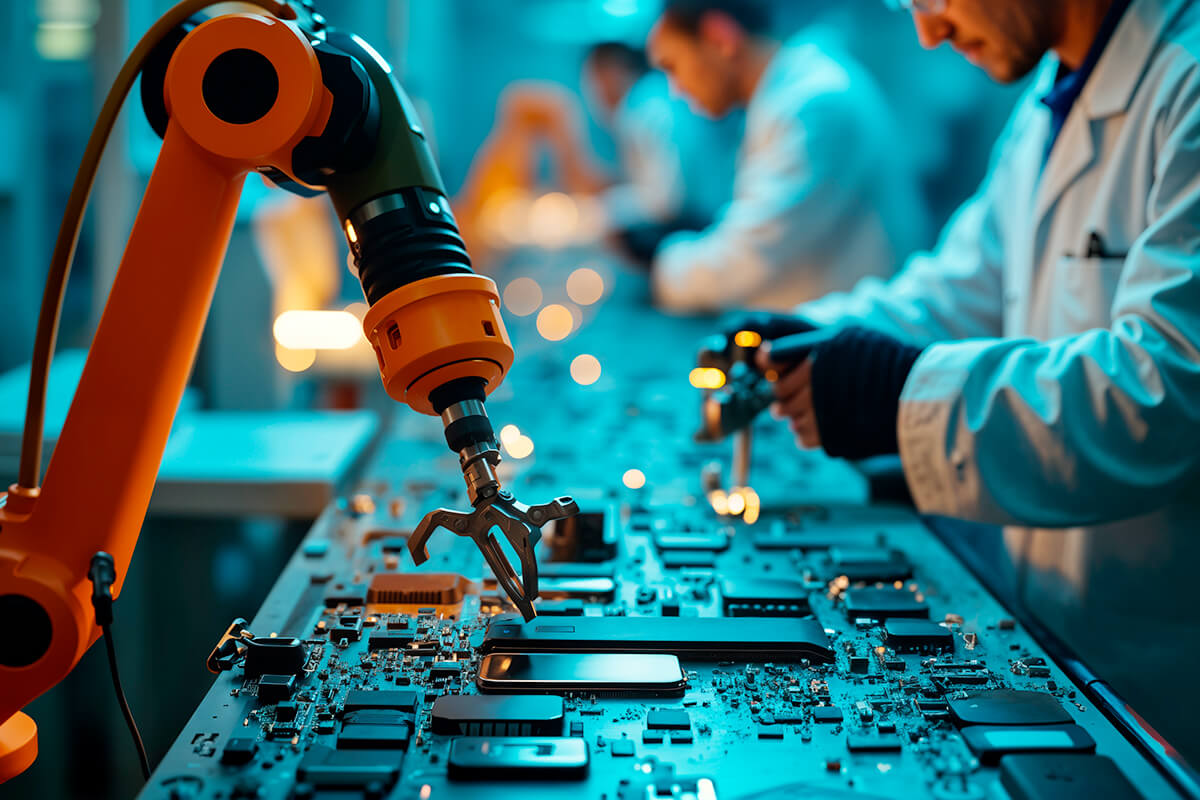

Edge Layer (AIChip-Powered)

- Ultra-low-power inference on vibration/temperature/acoustic/GNSS/video

- On-device DSP + ML: FFTs, MFCCs, spectral kurtosis, seasonal decomposition

- Event gating: retain peaks, anomalies, and KPI summaries rather than the raw firehose

- Zero-trust posture: secure boot, encrypted storage, signed firmware updates
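Event gating, as described in the list above, can be sketched as follows: each fixed-size window is reduced to a few summary values, and the raw samples are kept only when the window contains a peak above a limit. The function names, the chosen summary fields (mean, RMS, peak), and the `peak_limit` parameter are assumptions for illustration, not a description of the AIChips firmware.

```python
import math


def summarize_window(samples):
    """Reduce one window of raw samples to a few KPI values."""
    n = len(samples)
    return {
        "mean": sum(samples) / n,
        "rms": math.sqrt(sum(x * x for x in samples) / n),
        "peak": max(abs(x) for x in samples),
    }


def gate_events(signal, window_size, peak_limit):
    """Yield one summary per full window; attach raw samples only for events."""
    for start in range(0, len(signal) - window_size + 1, window_size):
        window = signal[start:start + window_size]
        record = {"start": start, **summarize_window(window)}
        if record["peak"] > peak_limit:
            record["raw"] = list(window)  # keep the evidence for this event
        yield record
```

With a quiet signal, every record carries three numbers instead of the full window, so the upstream volume shrinks roughly by the window size.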

Cloud & Analytics

- Time-series lakes + vector stores for signals and embeddings

- Auto-labeling with weak supervision to accelerate model retraining

- Dashboards & APIs for maintenance, quality, and operations teams

High-Impact Use Cases

Manufacturing

- Bearing & motor health via vibration + current signatures

- Quality drift detection from line-side cameras and sensors

- Traceability: signed logs for ISO/IEC compliance
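One way to picture signed logs for traceability is a chain in which each entry's signature also covers the previous signature, so an edited or removed entry breaks verification. The sketch below uses HMAC-SHA256 from the Python standard library. It is an assumption-laden illustration: the entry layout and function names are hypothetical, a production system would more likely use asymmetric signatures with hardware-protected keys, and nothing here is claimed to satisfy any particular standard.

```python
import hashlib
import hmac
import json


def sign_entry(key, prev_sig, payload):
    """Build an entry whose signature covers the payload and the previous signature."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    sig = hmac.new(key, (prev_sig + body).encode("utf-8"), hashlib.sha256).hexdigest()
    return {"payload": payload, "prev": prev_sig, "sig": sig}


def append(log, key, payload):
    """Append a signed entry linked to the current tail of the log."""
    prev_sig = log[-1]["sig"] if log else ""
    log.append(sign_entry(key, prev_sig, payload))


def verify(log, key):
    """Return the index of the first bad entry, or None if the chain is intact."""
    prev_sig = ""
    for i, entry in enumerate(log):
        expected = sign_entry(key, prev_sig, entry["payload"])["sig"]
        if entry["prev"] != prev_sig or not hmac.compare_digest(entry["sig"], expected):
            return i
        prev_sig = entry["sig"]
    return None
```

Because every signature depends on the one before it, an auditor holding the key can detect both a changed reading and a missing record.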

Energy & Utilities

- Transformer/feeder monitoring (temperature, harmonics, partial discharge)

- Grid edge forecasting to balance DERs and reduce losses

Cold Chain & Pharma

- GxP-aligned temperature/humidity logging with audit trails

- Spoilage prediction from thermal profiles and openings data

Mobility & Logistics

- Condition-based maintenance for fleets

- Shock/tilt logging for high-value shipments

- Route-aware analytics blending GNSS and sensor context

How AIChips Delivers

- AI-Optimized Chipsets: Purpose-built for on-device ML with secure enclaves and accelerators.

- Model Library: Pretrained anomaly, RUL, and sensor-fusion models fine-tuned to your assets.

- Open Integration: Connectors for MQTT/OPC UA, data lakes, and leading observability stacks.

- Lifecycle Automation: Fleet-safe OTA, model rollbacks, drift alerts, and human-in-the-loop labeling.

- Time-to-Value: Proof-of-Value playbooks that move from pilot to fleet deployment in weeks, not quarters.

Frequently Asked (Technical) Questions

1. Do we need constant internet?

No. Inference and event gating run on the device, and store-and-forward buffering holds data until the link returns.

2. Can we keep raw data for R&D?

Yes—policy can mirror a fraction of raw windows to object storage while the rest is event-only.
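A simple way to mirror a fixed fraction of raw windows is deterministic hash-based sampling, sketched below. The function name `should_mirror_raw` and the `device_id`/`window_start` key are hypothetical; the only assumption is that each window can be identified by a stable key, so the same window always gets the same decision on every device and on replay.

```python
import hashlib


def should_mirror_raw(device_id, window_start, fraction):
    """Deterministically select about `fraction` of windows for raw retention."""
    key = f"{device_id}:{window_start}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big")  # integer in [0, 2**64)
    return bucket < fraction * 2**64
```

A retention policy could then call `should_mirror_raw("pump-17", 1700000000, 0.05)` for each window and upload the raw samples only when it returns `True`, keeping the rest event-only.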

3. How are models updated?

Secure OTA with staged rollouts and rollback on anomaly/latency regressions.
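The staged rollout with rollback can be sketched as a loop over growing cohorts. Everything here is illustrative: `deploy`, `healthy`, and `rollback` are hypothetical callbacks supplied by the caller, the stage fractions are arbitrary, and a real system would also wait for a soak period before judging health.

```python
def staged_rollout(devices, stages, deploy, healthy, rollback):
    """Deploy in growing cohorts; roll back every updated device on the first unhealthy stage."""
    updated = []
    for share in stages:  # cumulative fleet share, e.g. (0.1, 0.5, 1.0)
        target = max(1, round(len(devices) * share))
        for device in devices[len(updated):target]:
            deploy(device)
            updated.append(device)
        # The health check stands in for anomaly or latency regression detection.
        if not all(healthy(d) for d in updated):
            for device in reversed(updated):
                rollback(device)
            return {"status": "rolled_back", "stage": share, "touched": len(updated)}
    return {"status": "complete", "stage": stages[-1], "touched": len(updated)}
```

Starting with a small cohort limits how many devices see a bad model before the regression is caught.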

Conclusion

Smart Logging is the missing link between ubiquitous sensors and actionable outcomes. With edge AI, you analyze where the data is born, slash waste, and deploy predictions that move core KPIs. As IoT, Edge AI, and IIoT spending accelerates this decade, organizations that operationalize Smart Logging now will own the uptime, cost, and quality curves tomorrow.